How To Use Core Image With Live Camera To Detect Faces

Face Detection and Recognition With CoreML and ARKit

Implementing a face detection feature with ARKit and face recognition with CoreML model

An updated version is available in the following article:

Create a Single View Application

To begin, we need to create an iOS project using the Single View App template.

Now that you have your project: since I don't like using storyboards, the app is built programmatically, which means no buttons or switches to toggle, just pure code 🤗.

Delete Main.storyboard and set up your AppDelegate.swift file so that it creates the window and the root view controller in code.

Make sure to also remove "Main" from the Main Interface field in the deployment info.
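A minimal sketch of such an AppDelegate, assuming a project without a SceneDelegate (the Xcode 10-era Single View App template) and a `ViewController` class defined elsewhere in the project. In newer templates that use scenes, the same window setup would live in the SceneDelegate instead.

```swift
import UIKit

@UIApplicationMain
class AppDelegate: UIResponder, UIApplicationDelegate {

    var window: UIWindow?

    func application(_ application: UIApplication,
                     didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        // No storyboard, so create the window by hand and size it to the screen.
        window = UIWindow(frame: UIScreen.main.bounds)
        // ViewController is the app's single view controller (assumed to exist in the project).
        window?.rootViewController = ViewController()
        window?.makeKeyAndVisible()
        return true
    }
}
```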

Create Your Scene and Add It to the Subview

We only have one ViewController, which will be the main entry point for the application.

At this stage, we need to import ARKit and instantiate an ARSCNView, which automatically renders the live video feed from the device camera as the scene background. It also automatically moves its SceneKit camera to match the real-world movement of the device, which means that we don't need an anchor to track the positions of objects we add to the scene.

We need to give it the screen bounds so that the camera session takes up the whole screen.

In the viewDidLoad method, we are going to set up a few things, such as the delegate, and we also want to see the frame statistics in order to monitor frame drops.
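A minimal sketch of that first step, assuming the controller is named `ViewController` and the view is stored in a property called `sceneView` (the name the article uses later when grabbing camera frames):

```swift
import UIKit
import ARKit

class ViewController: UIViewController {

    // ARSCNView renders the live camera feed as the scene background.
    // Giving it the screen bounds makes the camera session fill the display.
    let sceneView = ARSCNView(frame: UIScreen.main.bounds)
}
```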

Start an ARFaceTrackingConfiguration Session

Now we need to start a session with an ARFaceTrackingConfiguration. This configuration gives us access to the front-facing TrueDepth camera, which, at the time of writing, is only available on the iPhone X, XS and XR. Here's a more detailed explanation from Apple's documentation:

A face tracking configuration detects the user's face in view of the device's front-facing camera. When running this configuration, an AR session detects the user's face (if visible in the front-facing camera image) and adds to its list of anchors an ARFaceAnchor object representing the face. Each face anchor provides information about the face's position and orientation, its topology, and features that describe facial expressions.

Source: Apple

Putting it together, viewDidLoad adds the scene view, sets its delegate, turns on the statistics and runs the face tracking session.
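A sketch of how that viewDidLoad might look. It assumes `ViewController` conforms to `ARSCNViewDelegate` through the extension described in the "Capture Camera Frames" section (without that conformance the `delegate = self` line will not compile). The `isSupported` guard is an addition, not necessarily part of the original project: it avoids starting the session on hardware without face tracking.

```swift
import UIKit
import ARKit

class ViewController: UIViewController {

    // Full-screen AR view; the camera feed fills the display.
    let sceneView = ARSCNView(frame: UIScreen.main.bounds)

    override func viewDidLoad() {
        super.viewDidLoad()
        view.addSubview(sceneView)

        // Requires ARSCNViewDelegate conformance, added in an extension.
        sceneView.delegate = self
        // Overlay fps and frame-time statistics to spot frame drops.
        sceneView.showsStatistics = true

        // Face tracking needs supported front-camera hardware; skip the session otherwise.
        guard ARFaceTrackingConfiguration.isSupported else {
            print("Face tracking is not supported on this device")
            return
        }
        sceneView.session.run(ARFaceTrackingConfiguration())
    }
}
```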

Train a Face Recognition Model

There are multiple ways to create a .mlmodel file that is compatible with CoreML; these are the common ones:

- Turicreate: a Python library that simplifies the development of custom machine learning models and, more importantly, lets you export your model to a .mlmodel file that Xcode can parse.

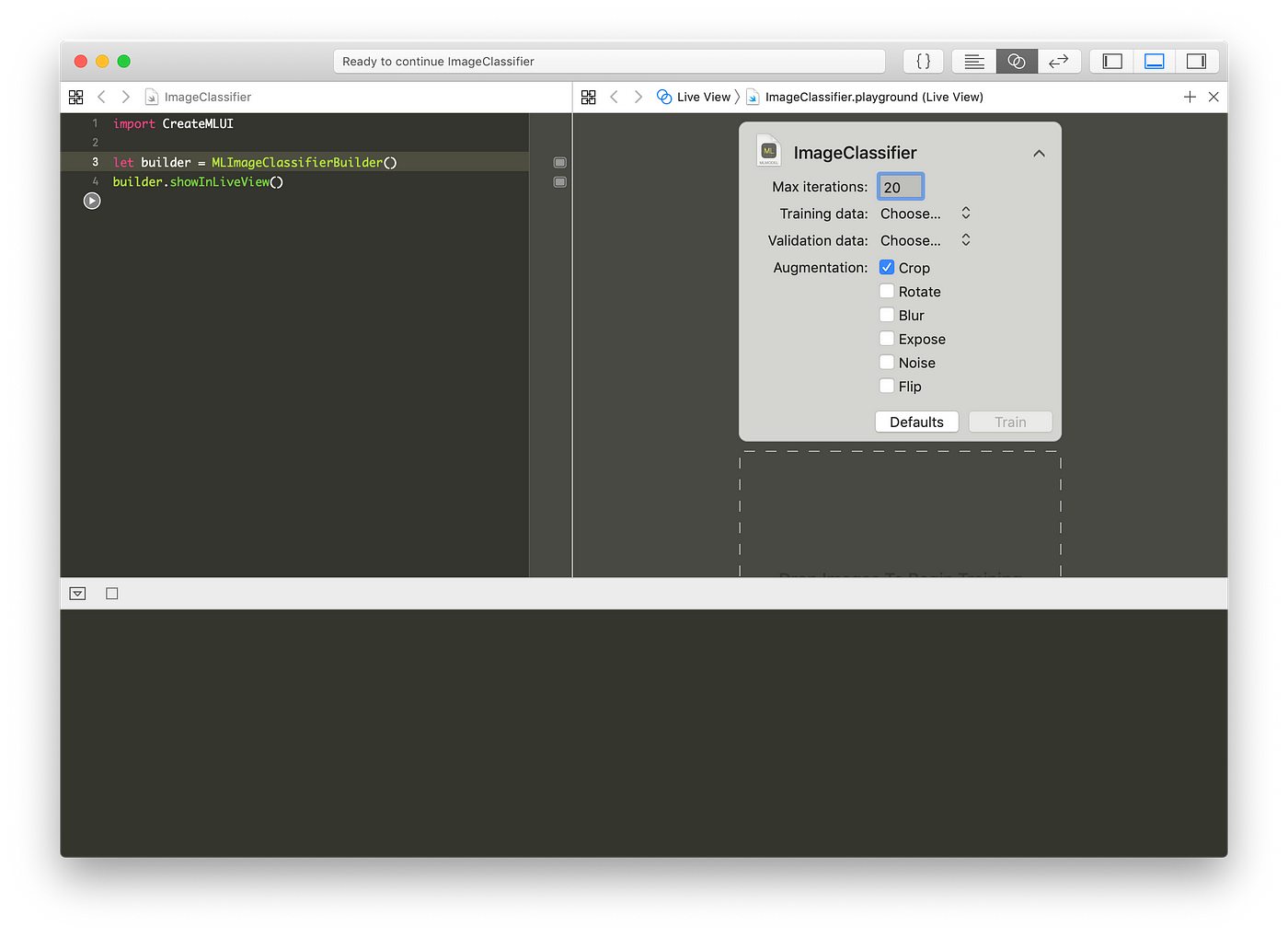

- MLImageClassifierBuilder(): a built-in solution available out of the box with Xcode that gives you what is essentially a drag-and-drop interface to train a relatively simple model.

I created multiple models to test both solutions. Since I don't have a big dataset, I decided to use MLImageClassifierBuilder() with a set of 67 images of 'Omar MHAIMDAT' (which is my name) and a set of 261 faces labeled 'Unknown' that I found on Unsplash.

Open a playground and launch MLImageClassifierBuilder in its live view.

I would recommend setting the max iterations to 20 and adding a Crop augmentation, which adds 4 cropped instances of each image.
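The playground code is short. A minimal sketch, assuming a macOS playground in Xcode 10 (CreateMLUI and MLImageClassifierBuilder are macOS-only and were deprecated in later Xcode releases in favor of the Create ML app):

```swift
import CreateMLUI

// Opens the image classifier trainer in the playground's live view.
// Drag a folder containing one sub-folder per label
// (e.g. "Omar MHAIMDAT" and "Unknown") onto the drop area to train.
let builder = MLImageClassifierBuilder()
builder.showInLiveView()
```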
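If you prefer to set these options in code instead of the builder UI, the CreateML framework's `MLImageClassifier` accepts the same settings. This is a sketch, not the method used in the article: the helper names are hypothetical, the training folder is assumed to hold one sub-folder per label, and the `ModelParameters` initializer shown is the macOS 10.14 one (later SDKs deprecate it in favor of differently labeled parameters).

```swift
import CreateML
import Foundation

/// Parameters matching the recommendation above: 20 iterations plus crop augmentation.
func makeFaceParameters() -> MLImageClassifier.ModelParameters {
    return MLImageClassifier.ModelParameters(
        featureExtractor: .scenePrint(revision: 1),
        validationData: nil,
        maxIterations: 20,
        augmentationOptions: [.crop])
}

/// Trains a classifier from `trainingDirectory` (one sub-folder per label,
/// e.g. "Omar MHAIMDAT" and "Unknown") and writes the .mlmodel to `outputURL`.
func trainFaceClassifier(trainingDirectory: URL, outputURL: URL) throws {
    let classifier = try MLImageClassifier(
        trainingData: .labeledDirectories(at: trainingDirectory),
        parameters: makeFaceParameters())
    try classifier.write(to: outputURL)
}
```

You would call `trainFaceClassifier(trainingDirectory:outputURL:)` with your own folder and destination file, then drag the resulting .mlmodel into the Xcode project.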

Capture Camera Frames and Inject Them Into the Model

We need to extend our ViewController with the scene delegate, ARSCNViewDelegate. We need two delegate methods: one to set up face detection and the other to update our scene when a face is detected.

Face detection:

Unfortunately, the scene doesn't update when I open my eyes or mouth, so we need to update it accordingly.

Update the scene:

The face anchor carries the whole, updated face geometry, and we use it to update the node.
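A sketch of the two delegate methods together. It assumes the face is visualized as a wireframe `ARSCNFaceGeometry` (the original rendering choice isn't shown here) and that the controller exposes the `sceneView` property:

```swift
import ARKit
import SceneKit

extension ViewController: ARSCNViewDelegate {

    // Face detection: called when ARKit adds an anchor; return a node for face anchors.
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        guard anchor is ARFaceAnchor,
              let device = sceneView.device,
              let faceGeometry = ARSCNFaceGeometry(device: device) else {
            return nil
        }
        let node = SCNNode(geometry: faceGeometry)
        // Draw the mesh as lines so the camera image stays visible underneath.
        node.geometry?.firstMaterial?.fillMode = .lines
        return node
    }

    // Scene update: called whenever ARKit refreshes the anchor (eyes or mouth opening, etc.).
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor,
              let faceGeometry = node.geometry as? ARSCNFaceGeometry else {
            return
        }
        // Copy the anchor's latest mesh into the rendered geometry.
        faceGeometry.update(from: faceAnchor.geometry)
    }
}
```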

Get the camera frames:

This gets interesting because ARSCNView is backed by an ARSession, and every ARFrame the session produces exposes the camera image as a CVPixelBuffer (its capturedImage property) that we can feed to our model.

Here's the easy way to get it from our sceneView property, through the session's current frame.
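Written as a free function that takes the view (the function name is hypothetical), this might look like:

```swift
import ARKit

/// Returns the camera image of the session's most recent ARFrame,
/// or nil if the session hasn't produced a frame yet.
func currentPixelBuffer(from sceneView: ARSCNView) -> CVPixelBuffer? {
    return sceneView.session.currentFrame?.capturedImage
}
```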

Inject camera frames into the model:

Now that we can detect a face and have every camera frame, we are ready to feed our model some content.
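One common way to run a Core ML image classifier on a pixel buffer is through Vision. The sketch below assumes the trained model has been wrapped in a `VNCoreMLModel` (for example `try VNCoreMLModel(for: FaceClassifier().model)`, where `FaceClassifier` is a hypothetical name for the class Xcode generates from the .mlmodel). The `orientation` parameter is left to the caller because the correct value depends on the camera and the device orientation. Since `perform` is synchronous, call this off the main thread.

```swift
import CoreML
import Vision

/// Classifies one camera frame and returns the most confident label, or nil on failure.
func classifyFace(in pixelBuffer: CVPixelBuffer,
                  orientation: CGImagePropertyOrientation,
                  using model: VNCoreMLModel) -> (label: String, confidence: Float)? {
    let request = VNCoreMLRequest(model: model)
    // Crop the centre of the frame and scale it to the model's input size.
    request.imageCropAndScaleOption = .centerCrop

    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer,
                                        orientation: orientation,
                                        options: [:])
    do {
        try handler.perform([request]) // runs synchronously
    } catch {
        return nil
    }

    // Pick the highest-confidence classification (e.g. "Omar MHAIMDAT" or "Unknown").
    guard let observations = request.results as? [VNClassificationObservation],
          let best = observations.max(by: { $0.confidence < $1.confidence }) else {
        return nil
    }
    return (label: best.identifier, confidence: best.confidence)
}
```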

Show the Name Above the Recognized Face

The last and probably most frustrating part is projecting 3D text above the recognized face. If you think about it, our configuration is not as powerful as ARWorldTrackingConfiguration, which gives access to numerous methods and classes. We are instead using the front-facing camera, and very little can be achieved with it.

Still, we can project 3D text on the screen, though it won't track the face's movement and change accordingly.

Now that we have the SCNText object, we need to update it with the corresponding face and add it to the rootNode.
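A sketch of building the label node. The font size, scale and billboard constraint are illustrative choices, not taken from the original project: SCNText sizes are in scene units, which ARKit treats as meters, so a 10-point font is scaled down to roughly 2 cm.

```swift
import SceneKit
import UIKit

/// Builds a small 3D text node showing the recognized name.
func makeNameNode(for name: String) -> SCNNode {
    let text = SCNText(string: name, extrusionDepth: 1)
    text.font = UIFont.systemFont(ofSize: 10)
    text.firstMaterial?.diffuse.contents = UIColor.white

    let node = SCNNode(geometry: text)
    // 10 scene units * 0.002 = 0.02 m, a readable size next to a face.
    node.scale = SCNVector3(0.002, 0.002, 0.002)
    // Keep the label turned towards the camera.
    node.constraints = [SCNBillboardConstraint()]
    return node
}
```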
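One way to do this is to convert a point slightly above the face node into world coordinates and attach the label to the scene's root node there. This sketch assumes a hypothetical `placeName(_:above:in:)` helper and a 12 cm offset along the face's local y-axis (a guess to tune on device). Because the position is computed once per call, the label stays where it was placed instead of following the face.

```swift
import ARKit
import SceneKit

/// Positions `nameNode` just above `faceNode` (in world space) and adds it to the scene root.
func placeName(_ nameNode: SCNNode, above faceNode: SCNNode, in sceneView: ARSCNView) {
    // Point 12 cm above the face in its local space, converted to world coordinates.
    let worldPosition = faceNode.convertPosition(SCNVector3(0, 0.12, 0), to: nil)
    // Detach any previous placement before re-adding, so repeated calls don't stack labels.
    nameNode.removeFromParentNode()
    nameNode.position = worldPosition
    sceneView.scene.rootNode.addChildNode(nameNode)
}
```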

Final Result:

Here's the final result with face detection and recognition.

If you liked this piece, please clap and share it with your friends. If you have any questions, don't hesitate to send me an email at omarmhaimdat@gmail.com.

This project is available to download from my GitHub account.

Download the projection

Source: https://betterprogramming.pub/face-detection-and-recognition-with-coreml-and-arkit-8b676b7448be

Posted by: johninattleaces.blogspot.com
